Distill — Latest articles about machine learning. A collection of articles and comments with the goal of understanding how to design robust and general-purpose self-organizing systems.

Knowledge distillation - Wikipedia

Model Distillation Techniques for Deep Learning

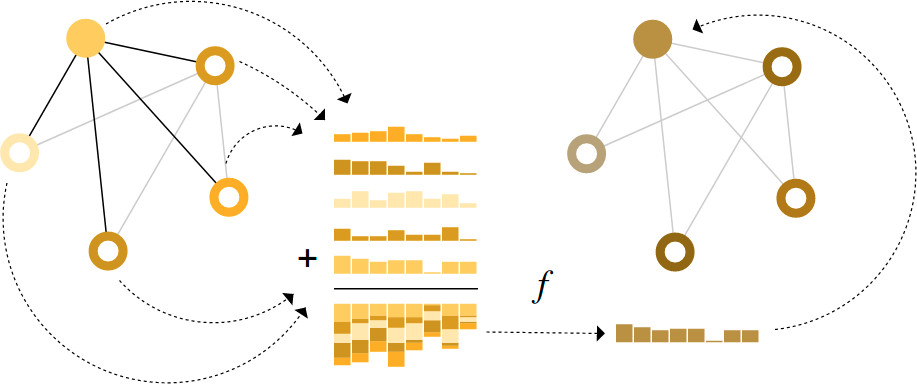

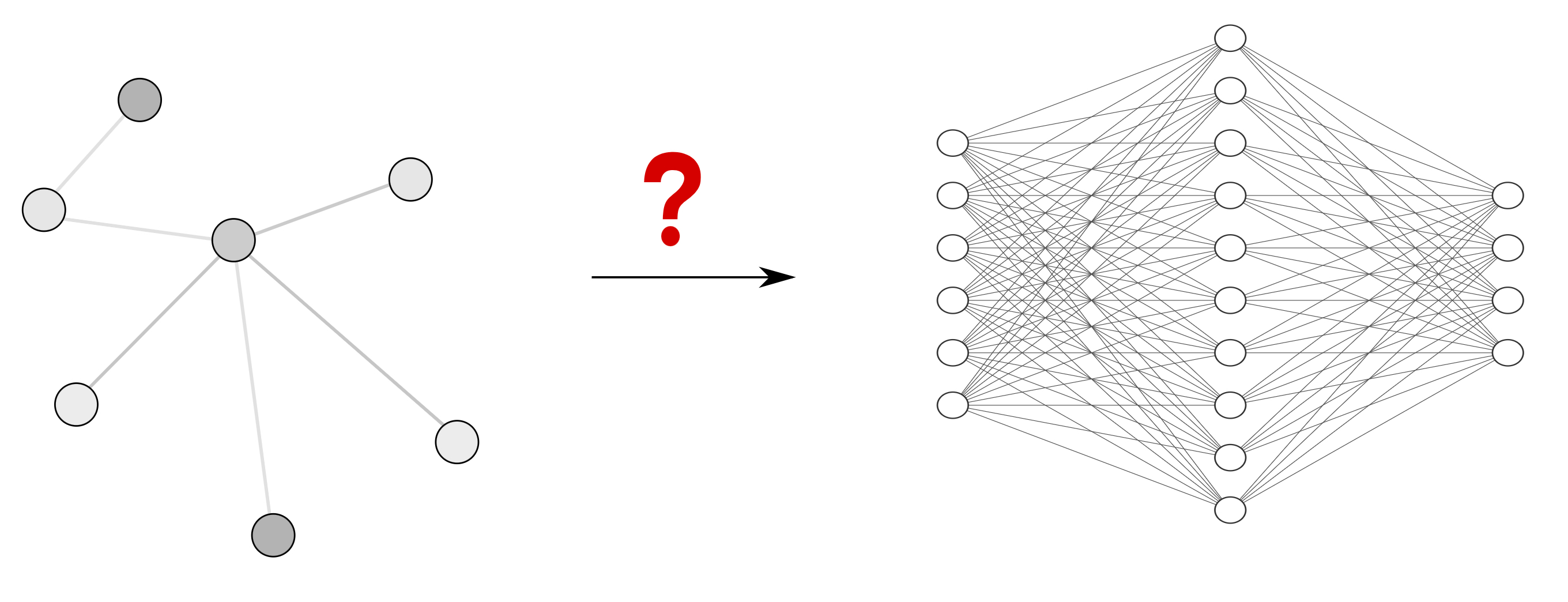

Knowledge distillation - Wikipedia. In machine learning, knowledge distillation or model distillation is the process of transferring knowledge from a large model to a smaller one.

Distilling the Knowledge in a Neural Network

Distilling the Knowledge in a Neural Network. A very simple way to improve the performance of almost any machine learning algorithm is to train many different models on the same data and …
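The snippet above is cut off before it says what is done with the trained models. A minimal sketch, assuming their predictions are combined by simple averaging of per-class probabilities; the function name `average_predictions` and the sample numbers are hypothetical and not taken from the paper.

```python
def average_predictions(model_outputs):
    """Average per-class probabilities across models (one probability list per model)."""
    n_models = len(model_outputs)
    n_classes = len(model_outputs[0])
    return [sum(out[c] for out in model_outputs) / n_models for c in range(n_classes)]


# Hypothetical class probabilities from three independently trained models.
outputs = [
    [0.7, 0.2, 0.1],
    [0.5, 0.4, 0.1],
    [0.6, 0.1, 0.3],
]
ensemble = average_predictions(outputs)

# Index of the class with the highest averaged probability.
predicted_class = max(range(len(ensemble)), key=ensemble.__getitem__)
```

An ensemble like this is expensive to serve, which is the usual motivation for distilling it into a single smaller model.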

What is Quantization and Distillation of Models ? | by Sweety Tripathi

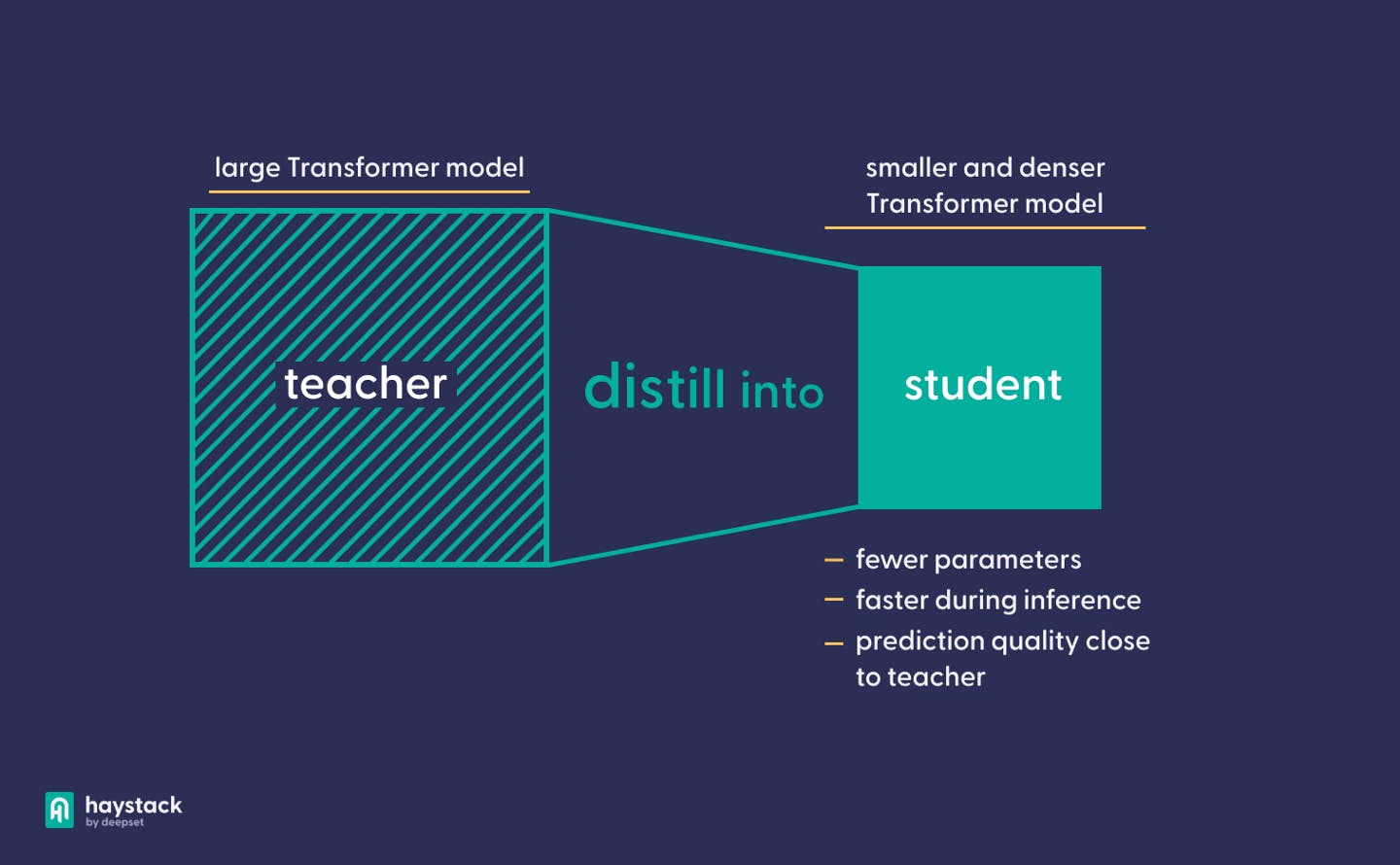

Knowledge Distillation with Haystack | deepset

What is Quantization and Distillation of Models ? | by Sweety Tripathi. Model distillation, also known as knowledge distillation, is a technique where a smaller model, often referred to as a student model, is trained …

Knowledge Distillation: Principles, Algorithms, Applications

Knowledge Distillation: Principles, Algorithms, Applications. A second adversarial-learning-based distillation method focuses on a discriminator model to differentiate the samples from the student and the …

Training Machine Learning Models More Efficiently with Dataset

Training Machine Learning Models More Efficiently with Dataset. Dataset distillation can be formulated as a two-stage optimization process: an “inner loop” that trains a model on learned data, and an “outer …
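The two-stage structure can be illustrated on a toy problem. This is only a sketch under stated assumptions: the snippet is truncated, so the outer stage is assumed here to adjust the learned data so that the inner-loop model performs well on real data. The one-parameter model `y = w * x`, the single synthetic point, and the finite-difference gradient are all illustrative choices, not the method of the cited article.

```python
def train_on_synthetic(y_syn, x_syn=1.0, steps=5, lr=0.1):
    """Inner loop: fit w in y = w * x to one synthetic point by gradient descent."""
    w = 0.0
    for _ in range(steps):
        grad = 2.0 * (w * x_syn - y_syn) * x_syn
        w -= lr * grad
    return w


def real_loss(w, real_data):
    """Mean squared error of the trained model on the real dataset."""
    return sum((w * x - y) ** 2 for x, y in real_data) / len(real_data)


def distill_dataset(real_data, outer_steps=50, outer_lr=0.1, eps=1e-4):
    """Outer loop: tune the synthetic label so the inner-loop model fits the real data."""
    y_syn = 0.0
    for _ in range(outer_steps):
        # A central finite difference stands in for differentiating through the inner loop.
        up = real_loss(train_on_synthetic(y_syn + eps), real_data)
        down = real_loss(train_on_synthetic(y_syn - eps), real_data)
        y_syn -= outer_lr * (up - down) / (2 * eps)
    return y_syn


real_data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]  # toy data drawn from y = 3x
y_syn = distill_dataset(real_data)
w = train_on_synthetic(y_syn)  # model trained only on the distilled point
```

Note that the learned label is not the "true" label for `x = 1`: it is whatever value makes five short training steps land near `w = 3`, which is the sense in which the synthetic data is optimized for training rather than for realism.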

A pragmatic introduction to model distillation for AI developers

![Knowledge distillation in deep learning and its applications [PeerJ]](https://dfzljdn9uc3pi.cloudfront.net/2021/cs-474/1/fig-2-2x.jpg)

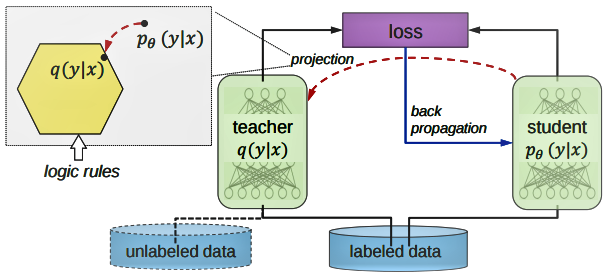

Knowledge distillation in deep learning and its applications [PeerJ]

A pragmatic introduction to model distillation for AI developers. The distillation process involves training the smaller neural network (the student) to mimic the behavior of the larger, more complex teacher …
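One common way to make a student "mimic" a teacher is to penalize the difference between their output distributions. A minimal sketch, assuming both models emit raw logits and that the outputs are softened with a temperature before comparison; the function names and the default temperature of 2.0 are illustrative assumptions, not details from the article.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL divergence from the teacher's softened outputs to the student's."""
    p = softmax(teacher_logits, temperature)  # teacher soft targets
    q = softmax(student_logits, temperature)  # student predictions
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


teacher = [4.0, 1.0, 0.5]        # hypothetical teacher logits for one example
student_far = [0.5, 1.0, 4.0]    # student that disagrees with the teacher
student_close = [3.8, 1.1, 0.4]  # student that nearly matches the teacher

loss_far = distillation_loss(student_far, teacher)
loss_close = distillation_loss(student_close, teacher)
```

In practice this term is often combined with the ordinary loss on ground-truth labels, and the student's parameters are updated to minimize the sum.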

Knowledge Distillation: Principles & Algorithms [+Applications]

Knowledge Distillation: Principles & Algorithms [+Applications]. Knowledge Distillation is a process of condensing knowledge from a complex model into a simpler one. It originates from Machine Learning, where …

Distill is dedicated to making machine learning clear and dynamic.

Research Guide: Model Distillation Techniques for Deep Learning. Knowledge distillation is a model compression technique whereby a small network (student) is …